
DataMesh FactVerse DFS

Overview

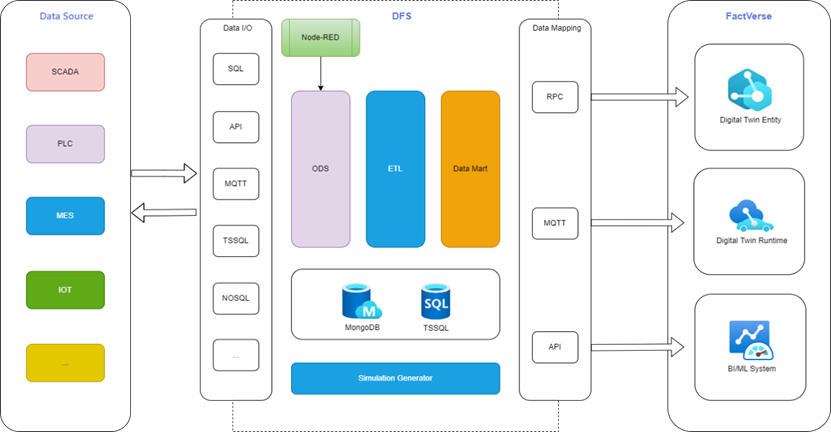

DFS (Data Fusion Services) is a fusion engine used to connect and process industrial data. It integrates and processes data from various industrial software for data analysis and business optimization. DFS supports the input of both simulated and real-time data, and can be associated with devices and digital twins, enabling data-driven simulation and optimization.

Main components

DFS Data Warehouse: The DFS Data Warehouse is responsible for overall management, data storage, statistical calculations, and reporting services. Its key features include:

- Connecting multiple DFS adapters with unified management and configuration.

- Providing storage services by connecting to storage hardware (e.g., hard disk arrays) and supporting external expansion.

- Using a dual-network-card design to separate access and output, enhancing security.

- Typically deployed as rack-mounted servers in the user’s data center for on-premises deployments.

DFS Hub: The DFS Hub serves as the central point for connecting various adapters and the DFS system, managing data reception, processing, and distribution. Its key features include:

- Receiving external data into the DFS system and performing initial processing, transformation, and cleansing.

- Storing all data in the DFS Data Warehouse, as DFS adapters do not handle data storage directly.

- Supporting multiple DFS adapters within a single system, with each adapter linked to the DFS Data Warehouse.

- Offering compatibility with major communication protocols like RS485, MQTT, HTTP, Modbus, and OPC UA, enabling seamless integration with industrial software such as MES, PLM, WMS, and SCADA.

- For on-premises setups, DFS adapters are typically installed near devices or production lines and connect to the DFS Data Warehouse via wired or wireless networks.

Deployment

Key steps

Here are the key steps and connections involved in deploying DFS:

- Connecting customer equipment to the data collection system: First, the customer’s equipment needs to be connected to the data collection system to gather data from the equipment and transfer it to the DFS system.

- Connecting the data collection system to DFS Hub: The DFS Hub serves as the central point connecting various adapters and the DFS system, handling data reception, processing, and distribution. The data collection system sends the gathered data to the DFS Hub, which passes it into DFS for further processing.

- Connecting DFS Hub to the DFS Data Warehouse: Data is then transmitted from the DFS Hub to the DFS Data Warehouse. Within the DFS Data Warehouse, Node-RED collects the data from its sources into the ODS (raw data storage), and the ETL service then cleans the data and removes anomalies.

- Connecting DFS Data Warehouse to FactVerse: The ODS, ETL, and Data Mart are connected through data mapping interfaces, and the processed data is loaded into the Data Mart according to business requirements. The required data is then selected and linked to the digital twin, so that only cleaned, business-relevant data is imported into FactVerse to build the digital twin environment.
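To make the ODS, ETL, and Data Mart stages more concrete, here is a minimal sketch of how data might move through them. It is not DFS code: the row shape (`serial`, `ts`, `key`, `value`), the per-attribute valid ranges used as the anomaly rule, and the "latest value wins" Data Mart rule are all assumptions chosen for illustration.

```python
# A minimal sketch of the ODS -> ETL -> Data Mart flow. The record shape, the
# per-attribute valid ranges, and the "latest value wins" rule are assumptions
# for illustration, not the DFS storage schema.

def etl_clean(ods_rows, valid_range):
    """Drop rows whose value is missing or outside the assumed valid range."""
    cleaned = []
    for row in ods_rows:
        value = row.get("value")
        bounds = valid_range.get(row.get("key"))
        if value is None or bounds is None:
            continue  # missing value or attribute without a known range
        low, high = bounds
        if low <= value <= high:
            cleaned.append(row)
    return cleaned


def build_data_mart(cleaned, wanted_keys):
    """Keep the latest value of each business-relevant attribute per device."""
    latest = {}
    for row in cleaned:
        if row["key"] not in wanted_keys:
            continue
        slot = (row["serial"], row["key"])
        if slot not in latest or row["ts"] >= latest[slot][0]:
            latest[slot] = (row["ts"], row["value"])
    mart = {}
    for (serial, key), (_, value) in latest.items():
        mart.setdefault(serial, {})[key] = value
    return mart


ods = [
    {"serial": "Robot", "ts": 1000, "key": "angle", "value": 30.0},
    {"serial": "Robot", "ts": 2000, "key": "angle", "value": 999.0},  # anomaly
    {"serial": "Robot", "ts": 3000, "key": "angle", "value": 45.0},
    {"serial": "Robot", "ts": 3000, "key": "debug", "value": 1.0},  # not needed by the twin
]
cleaned = etl_clean(ods, {"angle": (-180.0, 180.0), "debug": (0.0, 1.0)})
print(build_data_mart(cleaned, {"angle"}))  # {'Robot': {'angle': 45.0}}
```

The Data Mart output here is a per-device attribute dictionary, which is the kind of shape that could then be linked to a digital twin's attributes.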

Cloud host deployment

Cloud host deployment is ideal for scenarios where real-time data is not critical. DFS cloud hosts can be set up quickly from cloud marketplace images and then configured to connect to FactVerse services.

Advantages

- Fast Deployment: Using cloud marketplace images, the DFS environment can be set up quickly, saving valuable time.

- Flexibility: Cloud hosts offer customizable specifications and configurations to meet specific requirements.

Typical use cases

- Rapid Proof of Concept (POC): Enterprises looking to quickly test DFS compatibility and performance with existing systems can use cloud host deployment for POC testing.

- Scene Playback of Historical Data: DFS can be deployed on a cloud host to perform scene playback of historical data, simulating data conditions over specific time periods for analysis and testing.

- Simulation and Calculation Based on a Data Generator: When enterprises need to conduct simulations and calculations based on simulated data, they can deploy DFS on a cloud host and use a data generator to perform experiments and calculations.

On-premises deployment

On-premises deployment is ideal for scenarios with high real-time data requirements. DFS Data Warehouse and DFS Hub can be deployed as all-in-one systems directly in the field environment.

Advantages

- Real-time Performance: On-premises deployment offers better control over real-time data transmission and processing, making it suitable for scenarios with demanding real-time processing requirements.

- Security: Private deployment enhances data security and privacy protection, meeting the enterprise’s data security needs.

- Control: Businesses have full control over the DFS environment and can customize and optimize it according to their specific needs.

Typical use cases

- On-Site Data Collection Platform Integration: In factory or on-site environments where real-time data collection and monitoring are needed, an on-premises deployment of the DFS environment can be set up to integrate and connect with the on-site data collection platform.

- Real-time Monitoring and Management of Production Environments: For scenarios requiring real-time monitoring and management of production environments, an on-premises deployment of the DFS environment can be set up to enable real-time processing and monitoring of production data.

- Large-Scale Data Analysis and Simulation: For scenarios involving big data analysis and simulation, an on-premises deployment of the DFS environment can be established to meet the demands of processing and computing large data volumes.

Typical use cases

DFS is suitable for a wide range of scenarios, including but not limited to the following:

- Simulation/Backtesting (Historical Data)

Description: Use data generators to create simulated or historical data sources, which are then mapped to digital twins for simulation and backtesting analysis.

Applications:

- In production environments, simulated data is generated with data generators to test and optimize production processes, as well as predict operational conditions.

- Historical data sources are used for backtesting analysis to understand trends and characteristics of past production data, providing a reference for future decision-making.

- Real-Time Connectivity for Business Insights

Description: Connect data acquisition platforms to transfer real-time data from on-site devices, sensors, or other data sources to DFS, which is then mapped to digital twins. This creates a real-time data pipeline that supports monitoring and application of digital twins.

Applications:

- In manufacturing, real-time sensor data is collected and transmitted to DFS, enabling live monitoring and management of production processes.

- The real-time data is mapped to digital twins to create a dynamic, real-time environment that helps businesses make proactive decisions through forecasting and optimization.

- Data Analysis and Computation

Description: Output cleaned datasets to BI, machine learning, and AI platforms for further analysis and deeper insights.

Applications:

- Cleaned and transformed datasets are provided to BI tools for data visualization, generating reports and charts that help businesses uncover trends and patterns in the data.

- The cleaned data is also provided to machine learning and AI platforms for predictive modeling, anomaly detection, and other advanced analytics, offering deeper insights and supporting informed decision-making.
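As an illustration of handing a cleaned dataset to a BI tool, the sketch below flattens records into a wide CSV table, one row per record and one column per attribute. It assumes the `{serial, ts, datas}` record shape used by the DFS data file template; the CSV layout itself is a hypothetical choice, not a documented DFS export format.

```python
import csv
import io


def records_to_csv(records):
    """Flatten {serial, ts, datas} records into a wide CSV, one row per record."""
    keys = sorted({key for r in records for key in r["datas"]})
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["serial", "ts", *keys])
    writer.writeheader()
    for r in records:
        # Attributes a record does not carry are written as empty cells.
        writer.writerow({"serial": r["serial"], "ts": r["ts"], **r["datas"]})
    return buf.getvalue()


cleaned = [
    {"serial": "Robot", "ts": 1000, "datas": {"angle": 30.0}},
    {"serial": "Robot", "ts": 2000, "datas": {"angle": 45.0, "speed": 2}},
]
print(records_to_csv(cleaned))
```

A wide layout is easy to load into most BI tools; a long layout (one row per attribute value) would be an equally valid choice for ML pipelines.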

Functional modules

DFS version 1.2 includes the following core functional modules that support data reception, conversion, management, and digital twin association, enabling data-driven simulation and optimization.

DFS Adapter

The DFS Adapter connects to external data sources and handles data preprocessing, conversion, and cleaning, making the data suitable for storage and use within the DFS platform.

Adapter Template

Adapter templates define rules for data access, conversion, filtering, and more, ensuring consistency and efficiency in data processing.

Template Management: You can create, modify, or delete adapter templates, and define how data is accessed, processed, and output.
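The idea of a template as a reusable set of access, conversion, and filtering rules can be sketched as a small declarative dictionary applied to each raw message. Everything here is hypothetical: the rule names (`serial_field`, `ts_field`, `rename`, `divide_by`, `min_value`) and the raw message keys are illustrative, not the actual DFS adapter template format, which is edited through the platform and Node-RED.

```python
from typing import Optional

# Hypothetical template: rule names are illustrative, not the DFS template format.
TEMPLATE = {
    "serial_field": "dev",               # access: where the device name is found
    "ts_field": "time",                  # access: where the millisecond timestamp is found
    "rename": {"t_c": "temperature"},    # conversion: raw key -> attribute name
    "divide_by": {"temperature": 10},    # conversion: raw value is in tenths of a degree
    "min_value": {"temperature": -50.0}, # filtering: drop implausible readings
}


def apply_template(raw: dict, template: dict) -> Optional[dict]:
    """Convert one raw message into a {serial, ts, datas} record, or None if filtered out."""
    serial = raw.get(template["serial_field"])
    ts = raw.get(template["ts_field"])
    if serial is None or ts is None:
        return None  # both identifiers are required
    datas = {}
    for raw_key, value in raw.items():
        if raw_key in (template["serial_field"], template["ts_field"]):
            continue
        key = template["rename"].get(raw_key, raw_key)
        if key in template["divide_by"]:
            value = value / template["divide_by"][key]
        datas[key] = value
    for key, minimum in template["min_value"].items():
        if key in datas and datas[key] < minimum:
            return None
    return {"serial": serial, "ts": ts, "datas": datas}


print(apply_template({"dev": "Robot", "time": 1700000000000, "t_c": 235}, TEMPLATE))
# {'serial': 'Robot', 'ts': 1700000000000, 'datas': {'temperature': 23.5}}
```

Keeping the rules as data rather than code is what lets one template be shared by several adapter instances.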

Adapter Instance

An adapter instance is a specific data access point created based on an adapter template, which processes data using the assigned template.

Data Source Management

The Data Source Management module helps configure and manage various types of data sources, including historical and simulation data sources.

Historical Data Source

Historical data sources store past operational data, enabling data playback (backtesting), trend forecasting, and anomaly detection.

Simulation Data Source

Simulation data sources generate virtual data to simulate real-world data flows, supporting testing and simulation analysis.
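A simulation data source essentially produces a plausible stream of values when no real device is available. The sketch below is a hypothetical data generator, not the DFS one: it emits records in the data file template shape (`serial`, `ts`, `datas`) for a single assumed attribute, `angle`, using a seeded, bounded random walk so that repeated runs give the same stream.

```python
import random


def generate_simulation_records(serial, start_ts_ms, count, interval_ms=1000, seed=0):
    """Return `count` records in the data file template shape, driven by a bounded random walk."""
    rng = random.Random(seed)  # seeded so the same task produces the same stream
    angle = 0.0
    records = []
    for i in range(count):
        # Random walk step, clamped to an assumed mechanical range of -90..90 degrees.
        angle = max(-90.0, min(90.0, angle + rng.uniform(-5.0, 5.0)))
        records.append({
            "serial": serial,
            "ts": start_ts_ms + i * interval_ms,
            "datas": {"angle": round(angle, 2)},
        })
    return records


for record in generate_simulation_records("Robot", 1_700_000_000_000, 3):
    print(record)
```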

Digital Twin Association

The Digital Twin Association module links data from physical devices to digital twins, enabling data-driven simulation and optimization.

Scene Configuration

Scene Configuration is used to create and manage virtual scenes for digital twins, allowing visualization of device states and data linking.

- Scene Creation: Create digital twin scenes using FactVerse Designer.

- Scene Import: Import existing twin scenes from the FactVerse platform and bind them with data in DFS.

Device Binding

Device binding links physical devices to digital twins, ensuring that data can drive the virtual model’s operation.

There are three ways to add device information:

- Import device details directly on the Device Binding page.

- Import device details when creating an adapter instance.

- Import device details when creating a simulation task.

Data-driven process

The process for implementing data-driven device interaction using DFS is outlined below:

- Import Digital Twin Scene: Import a digital twin scene created in FactVerse Designer into DFS.

- Select Data Source: (Historical Data, Simulation Data, Real-Time Data)

- Historical Data: Create simulation tasks using historical data from devices or scenes.

- Simulation Data: Upload device data to generate device information and create simulation tasks.

- Real-Time Data: Connect to external data sources, generate device information, and define data processing rules.

- Bind Devices to Digital Twins: Link physical devices to digital twins, allowing them to respond to data changes.

Import digital twin scene

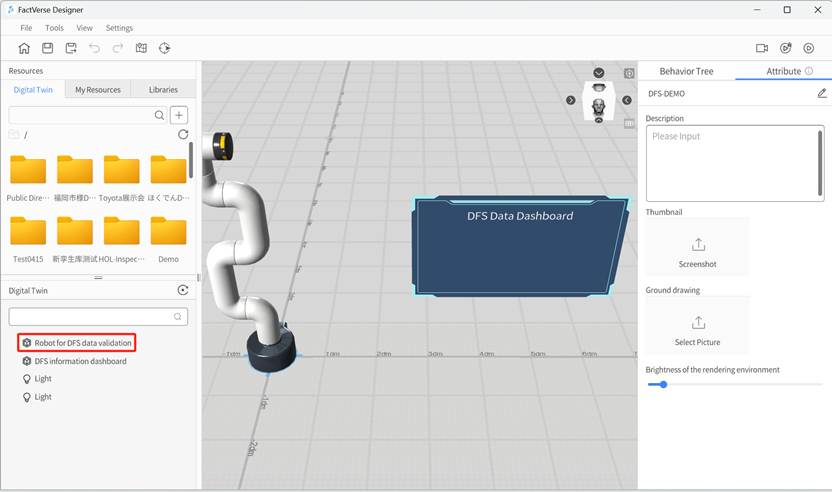

1. Create a digital twin scene: Use FactVerse Designer to create a digital twin scene, ensuring the scene includes digital twins corresponding to the actual devices.

Example: The scene includes a robotic arm (Robot for DFS data validation), which will later be bound to the actual device in the “Bind devices to digital twins” step.

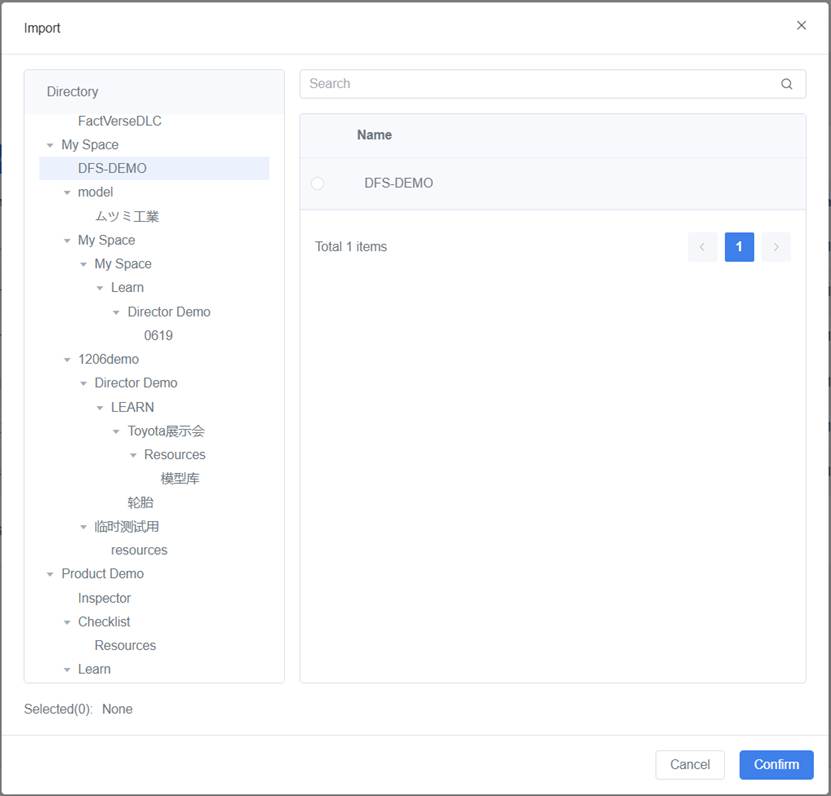

2. Import digital twin scene

a) Log in to the DFS Management Platform.

b) In the Digital Twin Association > Scene Configuration page, click Import to open the import window.

c) In the import window, select the scene to import (e.g., “DFS-DEMO”), and then click the Confirm button.

Create data source

Data sources are used to drive device interaction, and you can choose from historical data, simulation data, or real-time data.

Data source types

Historical Data: Used when there are historical records of a device or scene to simulate or validate device performance. This is ideal for analyzing past data trends to assess performance or predict future behavior.

Simulation Data: Used when there is no historical or real-time data available, typically during testing, validation, demonstrations, or development stages. Examples include:

- Device Testing: Simulate data for a device in a specific environment to validate its response or optimize configurations.

- Data Display or Validation: Use simulation data to display or validate scenes when historical or real-time data is unavailable.

- No Real-Time Device Data: If a device temporarily lacks real-time data, simulation data can be used for early-stage testing or development.

Real-Time Data: Used when the device is operating online and provides a real-time data stream. Suitable for the following scenarios:

- Dynamic Monitoring: Requires real-time monitoring of device or system status, with real-time data driving digital twin updates.

- Real-Time Decision Support: Requires optimization and scheduling based on real-time data.

- Device Interconnection: Multiple devices need to coordinate with each other through real-time data, adjust settings, or respond to events.
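The guidance above can be condensed into a rule of thumb. The function below is only one way to encode it; its name, its boolean inputs, and especially the precedence order (real-time first when live updates are needed, then historical, then simulation) are assumptions rather than rules enforced by DFS.

```python
def choose_data_source(live_stream_available, history_available, need_live_updates):
    """Suggest a DFS data source type; the precedence order is an assumption."""
    if need_live_updates and live_stream_available:
        return "real-time"    # dynamic monitoring, decision support, interconnection
    if history_available:
        return "historical"   # replay or analyze past trends
    if live_stream_available:
        return "real-time"
    return "simulation"       # no historical or real-time data yet


print(choose_data_source(False, False, True))  # simulation
```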

Create historical data simulation task

Historical data sources can be used for data playback, simulation testing, and trend analysis, helping users simulate based on historical data from devices or scenes.

Steps

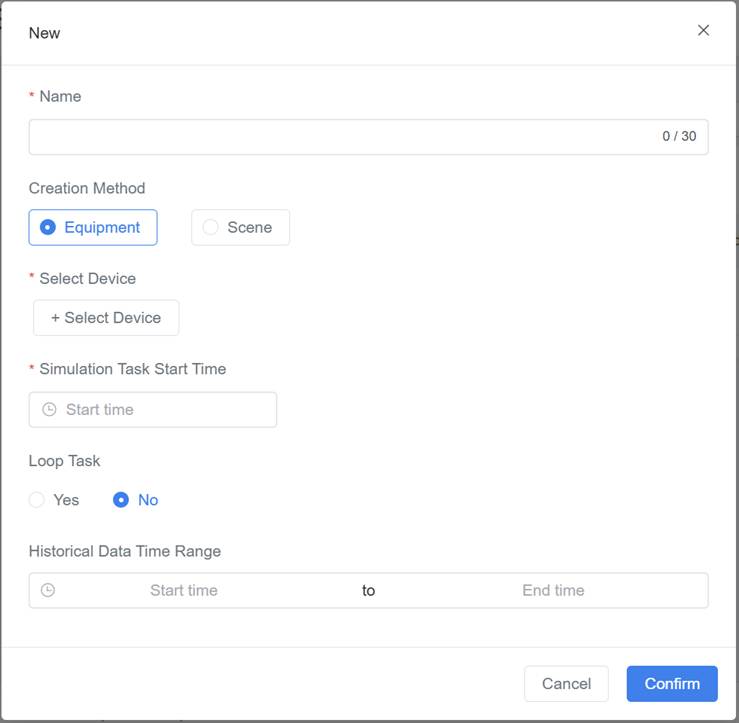

1. Create a new simulation task: In the Data Source Management > Historical Data Source page, click New Task to open the creation window.

2. Name the simulation task: Enter a name for the simulation task.

3. Select data (based on device or scene):

- Device historical data: Select the Equipment option, and click + Select Device to select the device from the device list to be used for the simulation task.

- Scene historical data: Select the Scene option, and click + Choose the Scene to select the scene from the scene list to be used for the simulation task.

4. Set simulation task start time: Set the start time for the simulation task to define the time range for generating simulation data.

5. Choose whether to loop the simulation:

- Enable: The newly created simulation data source task will loop continuously, suitable for ongoing testing or long-term demonstration scenarios.

- Disable: The simulation data source task will only run once, suitable for single tests or validation scenarios.

6. (Optional) Select historical data range: Choose the period for the historical data playback.

7. Confirm Creation: Click the Confirm button to complete the creation of the simulation task.
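One way to picture how the start time and the optional historical range interact is sketched below. It assumes that playback keeps the original spacing between records and simply shifts the selected window so its first record lands at the task start time; the function name and that interpretation are assumptions, not documented DFS behavior. Timestamps are assumed to be integer milliseconds.

```python
def build_playback(records, task_start_ms, range_start_ms=None, range_end_ms=None):
    """Select records in [range_start_ms, range_end_ms] and shift them to start at task_start_ms.

    Assumes integer millisecond `ts` values and that playback preserves the original spacing.
    """
    selected = sorted(
        (r for r in records
         if (range_start_ms is None or r["ts"] >= range_start_ms)
         and (range_end_ms is None or r["ts"] <= range_end_ms)),
        key=lambda r: r["ts"],
    )
    if not selected:
        return []
    offset = task_start_ms - selected[0]["ts"]
    return [{**r, "ts": r["ts"] + offset} for r in selected]


history = [
    {"serial": "Robot", "ts": 1000, "datas": {"angle": 10}},
    {"serial": "Robot", "ts": 2000, "datas": {"angle": 20}},
    {"serial": "Robot", "ts": 3000, "datas": {"angle": 30}},
]
print([r["ts"] for r in build_playback(history, 10_000, range_start_ms=2000)])  # [10000, 11000]
```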

Create simulation data task

Simulation data is used to generate data streams when real device data is unavailable, supporting testing, validation, or demonstrations.

Steps

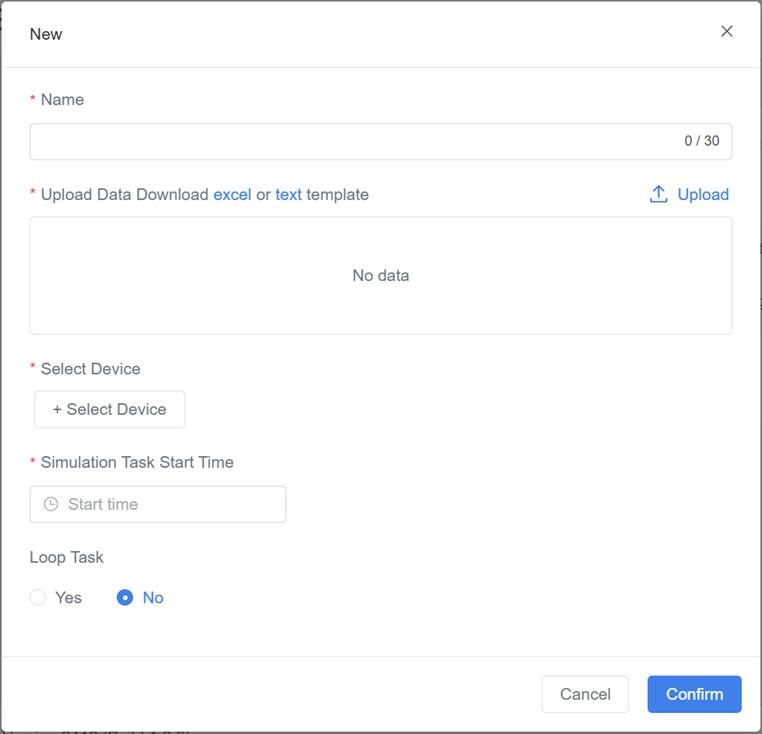

1. Create a new simulation task: In the Data Source Management > Simulation Data Source page, click New Task to open the creation window.

2. Enter simulation task information:

a) Name the simulation task: Enter a name for the simulation task.

b) Upload Data: This step imports device data and generates device information.

- Upload an existing data file: Choose a prepared data file to upload. Supported formats are Excel and text files. Ensure that the file contains the relevant device attributes and follows the predefined format so the system can read and parse it correctly.

- Download template and fill in data: If no data file is ready, you can download a predefined template (Excel or Text format). If the device supports data export, you can export data from the device system, fill in the template with the necessary data, and upload the file.

Data file template

```json
[
  {
    "serial": "Device name",
    "ts": "String or integer millisecond timestamp",
    "datas": {
      "key1": "data1",
      "key2": "data2"
    }
  }
]
```

Template parameter details:

- serial: Device name.

- ts: Timestamp in milliseconds, given as a string or an integer.

- datas: The device attributes, where each key (key1, key2, ...) is an attribute name and each value (data1, data2, ...) is that attribute's value.

Note: Device attribute names must match the attributes in the digital twin. If they don’t, you’ll need to manually bind the attributes during the “Bind devices to digital twins” step.
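Before uploading a text data file, it can help to check it against the template locally. The sketch below is a hypothetical pre-upload check, not the parser DFS uses: it reads a JSON array in the template shape, verifies the `serial`, `ts`, and `datas` fields, and normalizes string timestamps to integer milliseconds.

```python
import json


def parse_data_file(text):
    """Parse a data file in the template shape and normalize `ts` to integer milliseconds."""
    entries = json.loads(text)
    if not isinstance(entries, list):
        raise ValueError("top level must be a JSON array")
    records = []
    for i, entry in enumerate(entries):
        if not isinstance(entry, dict):
            raise ValueError(f"entry {i}: must be a JSON object")
        serial, ts, datas = entry.get("serial"), entry.get("ts"), entry.get("datas")
        if not isinstance(serial, str) or not serial:
            raise ValueError(f"entry {i}: 'serial' must be a non-empty string")
        if isinstance(ts, str) and ts.isdecimal():
            ts = int(ts)  # the template allows string timestamps
        if isinstance(ts, bool) or not isinstance(ts, int):
            raise ValueError(f"entry {i}: 'ts' must be a millisecond timestamp")
        if not isinstance(datas, dict):
            raise ValueError(f"entry {i}: 'datas' must be an object")
        records.append({"serial": serial, "ts": ts, "datas": datas})
    return records


sample = '[{"serial": "Robot", "ts": "1700000000000", "datas": {"angle": "30"}}]'
print(parse_data_file(sample))
```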

c) Select Device: Click + Select Device to select the device from the list to be used for the simulation task.

Note: The device selected here must be included in the uploaded data file. Only devices present in the data file can be used in the simulation task.
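The two notes above (selected devices must appear in the file, and attribute names should match the digital twin) can be checked ahead of time. This is a hypothetical helper: the `twin_attributes` input, a mapping from each device to the attribute names of its digital twin, is assumed to be known in advance and is not something DFS exposes in this form.

```python
def check_simulation_task(records, selected_devices, twin_attributes):
    """Return (devices missing from the file, attributes needing manual binding per device).

    twin_attributes maps each device to the attribute names of its digital twin (assumed input).
    """
    in_file = {r["serial"] for r in records}
    missing = sorted(set(selected_devices) - in_file)
    needs_binding = {}
    for r in records:
        if r["serial"] not in selected_devices:
            continue
        extra = set(r["datas"]) - twin_attributes.get(r["serial"], set())
        if extra:
            needs_binding.setdefault(r["serial"], set()).update(extra)
    return missing, needs_binding


records = [{"serial": "Robot", "ts": 0, "datas": {"angle": 30, "spd": 2}}]
print(check_simulation_task(records, ["Robot", "Conveyor"], {"Robot": {"angle", "speed"}}))
# (['Conveyor'], {'Robot': {'spd'}})
```

Any attribute reported in the second result would need a manual association in the "Bind devices to digital twins" step.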

d) Set simulation task start time: Set the start time for the simulation task to define the time range for generating simulation data.

e) Choose whether to loop the simulation:

- Enable: The newly created simulation data task will loop continuously, suitable for ongoing testing or long-term demonstration scenarios.

- Disable: The simulation data task will only run once, suitable for single tests or validation scenarios.
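The difference between a looping and a one-off task can be sketched as a generator. The looping behavior shown here, advancing timestamps by one full period per pass so the stream stays monotonic, is an assumption about how a continuous replay could work, not a description of DFS internals; `interval_ms`, the gap between passes, is likewise hypothetical.

```python
from itertools import islice


def replay(records, loop, interval_ms=1000):
    """Yield records once, or repeat them forever with timestamps advanced by one period per pass.

    Assumes `records` is sorted by integer `ts`; interval_ms is the assumed gap between passes.
    """
    if not records:
        return
    period = records[-1]["ts"] - records[0]["ts"] + interval_ms
    pass_no = 0
    while True:
        for r in records:
            yield {**r, "ts": r["ts"] + pass_no * period}
        if not loop:
            return
        pass_no += 1


data = [{"serial": "Robot", "ts": 0, "datas": {"angle": 10}},
        {"serial": "Robot", "ts": 1000, "datas": {"angle": 20}}]
print([r["ts"] for r in replay(data, loop=False)])             # [0, 1000]
print([r["ts"] for r in islice(replay(data, loop=True), 5)])   # [0, 1000, 2000, 3000, 4000]
```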

3. Confirm task creation: Click Confirm to complete the creation of the simulation task.

Access real-time data

Real-time data can be accessed from external data sources. You need to configure the data access interface and data processing rules to ensure that the data is properly stored, processed, and applied once it flows into DFS.

Configure data access interface

In the DFS Management Platform, the data access interface must be set up to ensure that DFS can correctly receive data sent from the data acquisition system.

The steps are as follows:

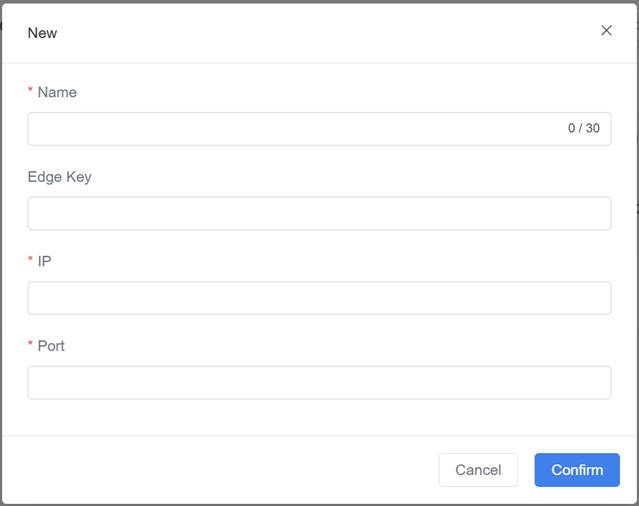

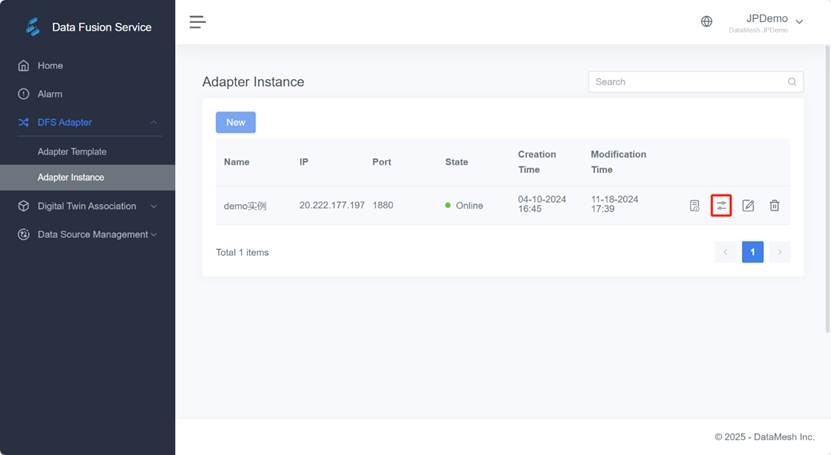

1. In the DFS Adapter > Adapter Instance page, click the New button to create a new adapter instance.

2. In the New window, enter the adapter instance name, the DFS adapter IP address (the management IP of the DFS adapter), and other relevant details.

IP address setup guidelines:

- For a single DFS adapter with multiple instances, use the same IP address but different port numbers.

- For multiple DFS adapters, use different IP addresses.
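The IP address guidelines can be expressed as a simple consistency check over a planned set of adapter instances. This is a sketch under stated assumptions: each instance is described by a hypothetical dict with `name`, `adapter`, `ip`, and `port` keys, and the port numbers in the example are illustrative only.

```python
import ipaddress


def check_adapter_instances(instances):
    """Check instance settings against the IP guidelines; return a list of problems.

    Each instance is a dict with hypothetical keys: name, adapter, ip, port.
    """
    problems = []
    ip_of_adapter, adapter_of_ip, used = {}, {}, set()
    for inst in instances:
        ip = str(ipaddress.ip_address(inst["ip"]))  # raises ValueError for a malformed IP
        name, adapter, port = inst["name"], inst["adapter"], inst["port"]
        # One adapter keeps a single IP; its instances differ only by port.
        if ip_of_adapter.setdefault(adapter, ip) != ip:
            problems.append(f"{name}: adapter {adapter} already uses IP {ip_of_adapter[adapter]}")
        # Different adapters must not share an IP.
        if adapter_of_ip.setdefault(ip, adapter) != adapter:
            problems.append(f"{name}: IP {ip} belongs to adapter {adapter_of_ip[ip]}")
        if (ip, port) in used:
            problems.append(f"{name}: port {port} is already taken on {ip}")
        used.add((ip, port))
    return problems


config = [
    {"name": "line1", "adapter": "A1", "ip": "192.168.1.10", "port": 1880},
    {"name": "line2", "adapter": "A1", "ip": "192.168.1.10", "port": 1881},
    {"name": "scada", "adapter": "A2", "ip": "192.168.1.11", "port": 1880},
]
print(check_adapter_instances(config))  # []
```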

3. Once all information is entered, click Confirm.

Set data processing and transformation rules

In the DFS Management Platform, a series of data processing and transformation rules need to be set up to meet specific business requirements. These may include data cleaning, transformation, aggregation, calculation, etc., to ensure that the processed data aligns with user expectations.

Steps

1. In the DFS Adapter > Adapter Template page, click the New button to open the creation window.

2. In the New window, enter the template name and template data.

3. Go to the DFS Adapter > Adapter Instance page, click the Select Template button for the adapter instance, and select an adapter template to set the data processing rules for that instance.

4. (Optional) Edit data processing and transformation rules in Node-RED:

a) Click the Edit Adapter button to open the Node-RED editing interface.

b) In the Node-RED editor, modify the nodes as needed.

Bind devices to digital twins

Binding digital twins to devices is the process of associating a physical device with a virtual object (digital twin) in the digital twin environment, enabling data-driven simulation and optimization.

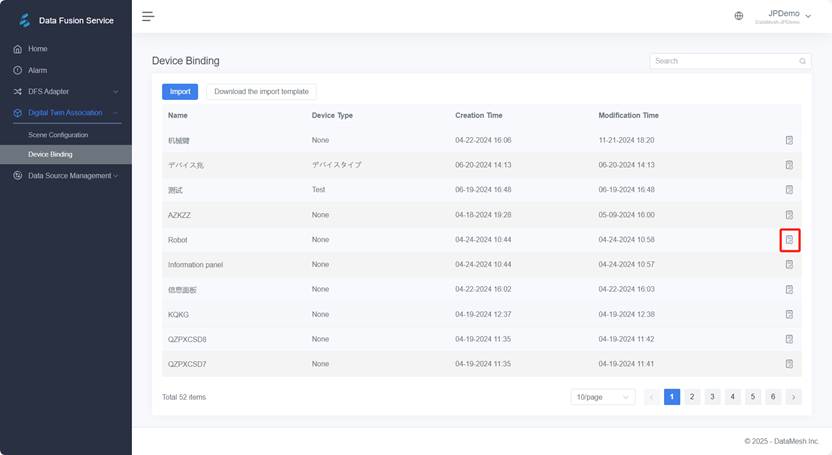

1. In the Digital Twin Association > Device Binding page, find the device to be bound, such as the device “Robot,” and click the corresponding details button to open the device details page.

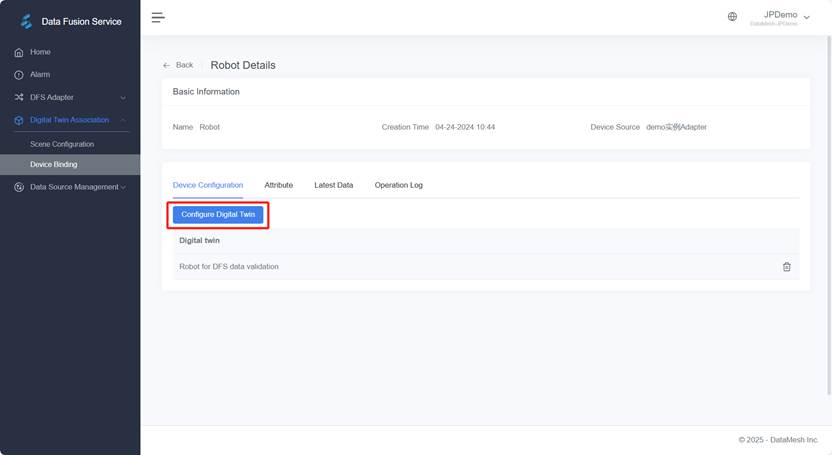

2. In the Device Configuration section, click the Configure Digital Twin button to open the digital twin configuration window.

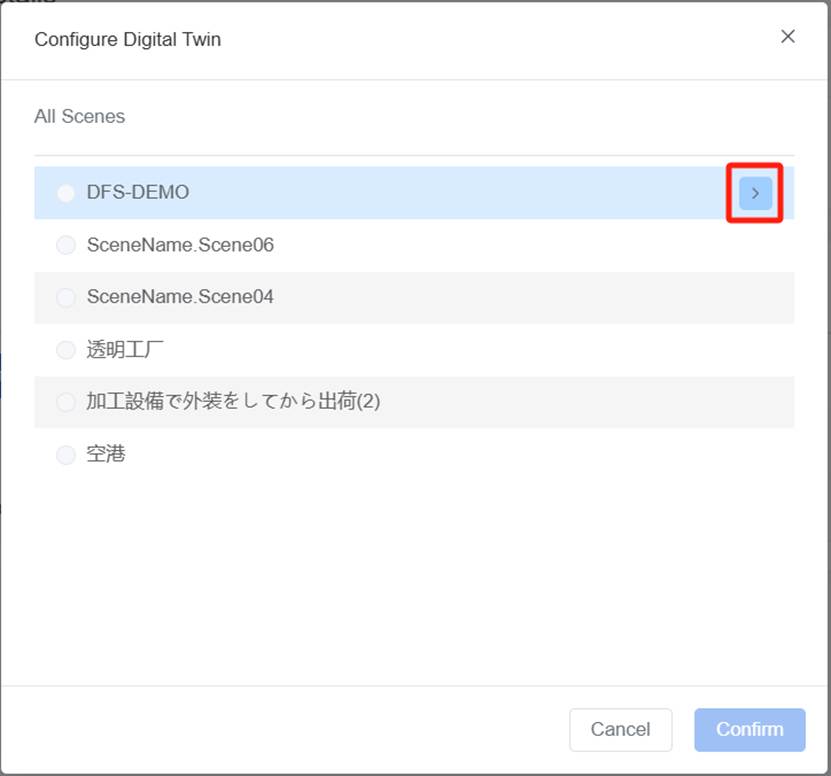

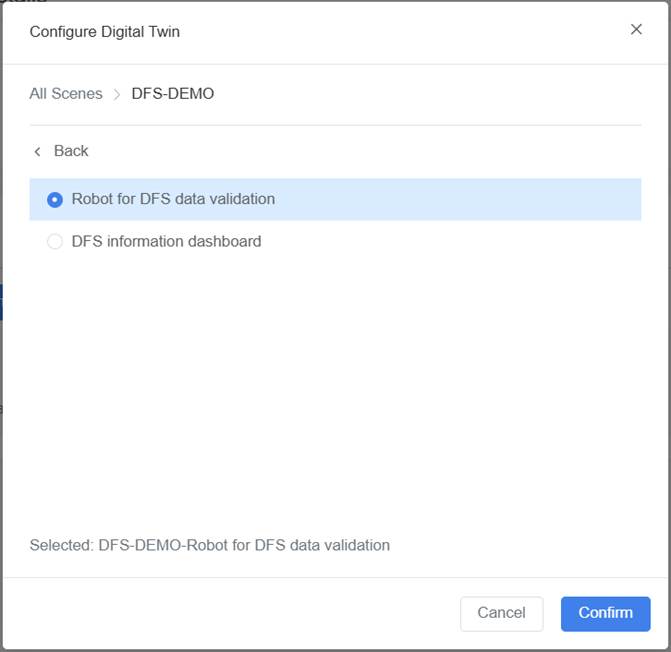

3. In the configuration window, click the next-level button for the scene the device belongs to.

4. Select the digital twin to bind, then click Confirm to complete the binding of the device to the digital twin.

5. Manually associate digital twin attributes with device attributes: This step is needed only when the attribute names in the digital twin do not match the device attribute names.

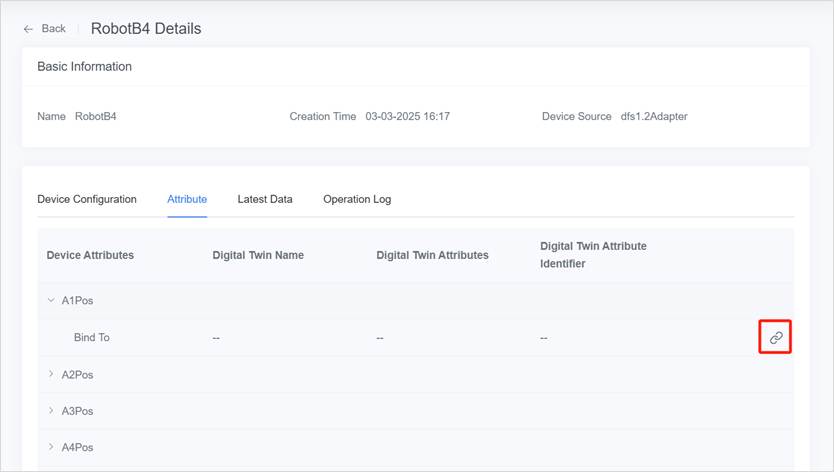

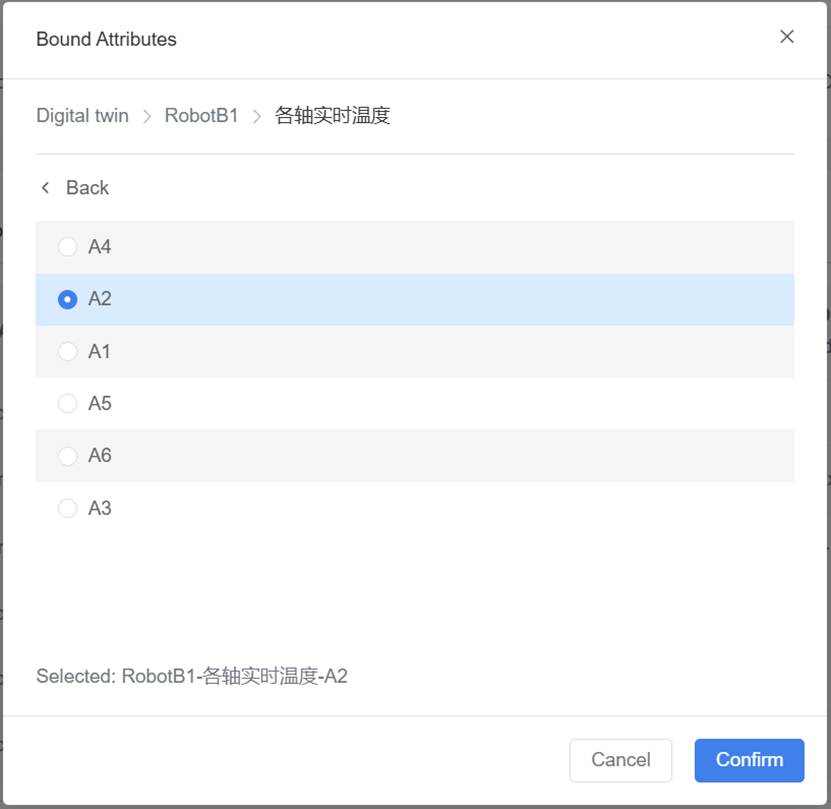

a) Click the Attributes tab and then click the Bind icon next to the device attribute.

b) In the pop-up Bound Attributes window, select the attribute to associate and click Confirm.
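The effect of automatic and manual attribute binding can be sketched as follows: attributes with identical names bind automatically, manual associations cover the rest, and incoming device readings are then translated into twin attribute updates. The function names and the precedence of manual bindings over name matches are assumptions for illustration, not the DFS binding logic.

```python
def resolve_bindings(device_attrs, twin_attrs, manual):
    """Map device attributes to twin attributes: manual bindings first, then identical names."""
    bindings, unbound = {}, []
    for attr in device_attrs:
        if attr in manual and manual[attr] in twin_attrs:
            bindings[attr] = manual[attr]      # manually associated in Bound Attributes
        elif attr in twin_attrs:
            bindings[attr] = attr              # names match, no manual step needed
        else:
            unbound.append(attr)               # still needs a manual association
    return bindings, unbound


def drive_twin(reading, bindings):
    """Translate one device reading into twin attribute updates, dropping unbound attributes."""
    return {bindings[k]: v for k, v in reading.items() if k in bindings}


bindings, unbound = resolve_bindings(["angle", "spd", "temp"], {"angle", "speed"}, {"spd": "speed"})
print(bindings, unbound)  # {'angle': 'angle', 'spd': 'speed'} ['temp']
print(drive_twin({"angle": 30, "spd": 2, "temp": 40}, bindings))  # {'angle': 30, 'speed': 2}
```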

Glossary

Data Fusion Services (DFS): FactVerse Data Fusion Services, used to continuously connect real-world data to FactVerse.

DFS Adapter: An adapter used by DFS to interface with various data sources and continuously acquire data.

DFS Hub: A server/hub that hosts multiple DFS adapters.

DFS Data Warehouse: A server that hosts data storage, processing, and consumption services within DFS.

DFS ODS: The raw data storage service within the DFS Data Warehouse.

Node-RED: An open-source, flow-based, low-code visual programming tool for IoT, used in DFS for pre-ODS data rule design.

DFS ETL: The ETL service within the DFS Data Warehouse, primarily used for continuous data extraction and cleaning.

DFS Data Mart: A post-processed data distribution service within the DFS Data Warehouse, used to provide processed data packages to business systems, reporting systems, and analytical learning platforms.